Google pushes conservative news sites far down search lists

09/23/2020 / By News Editors

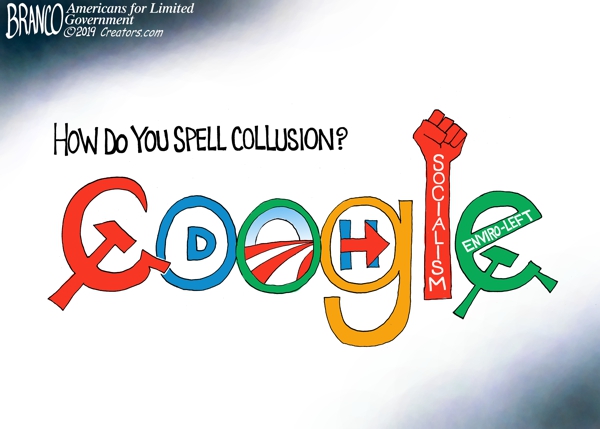

It has long been feared that Google, which controls almost 90% of U.S. Internet search traffic, could sway an election by altering the search results it shows users. New data indicate that may be happening, as conservative news sites including Breitbart, the Daily Caller, and the Federalist have seen their Google search listings dramatically reduced.

(Article by Maxim Lott republished from RealClearPolitics.com)

The data come from the search consultancy Sistrix, which monitors a million different Google search keywords and records how highly different sites rank across all of them.

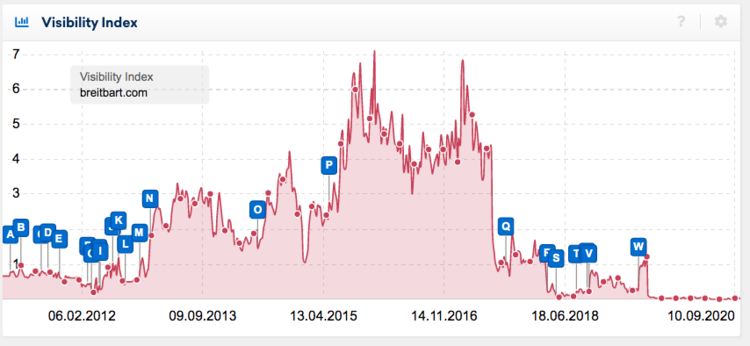

The tracker shows that Google search visibility for Breitbart first plunged in 2017, before falling to approximately zero in July 2019.

Breitbart saw that stark reduction in search visibility even as little else in the news outlet’s reporting model changed. Other conservative news sites, such as the Daily Caller, were also de-ranked at similar times.

Both Breitbart and the Daily Caller have confirmed that their Google traffic fell dramatically as their search rankings fell.

Internal Google files have hinted at such action. In 2018, the Daily Caller reported on a leaked exchange the day after President Trump’s 2016 election win. In it, employees debated whether Breitbart and the Daily Caller should be buried.

“This was an election of false equivalencies, and Google, sadly, had a hand in it,” Google engineer Scott Byer wrote, according to the documents obtained by the Daily Caller.

“How many times did you see … items from opinion blogs (Breitbart, Daily Caller) elevated next to legitimate news organizations? That’s something that can and should be fixed,” Byer wrote.

He added, “I think we have a responsibility to expose the quality and truthfulness of sources.”

In the leaked exchange, other Googlers pushed back, saying that it shouldn’t be Google’s role to rank sources’ legitimacy — and that hiding sites would only add to distrust.

Google did not respond to a request for comment about the data presented here.

An expert in search engine optimization pointed RealClearPolitics to a public Google document from 2019 outlining how the company now employs humans to go through webpages and rate them based on “Expertise/Authoritativeness/Trustworthiness.” “Google has acknowledged they use human search quality raters who help evaluate search results,” said Chris Rodgers, CEO and founder of Colorado SEO Pros.

Google does not directly use such ratings to rank sites, but “based on those ratings Google will then tweak their algorithm and use machine learning to help dial in the desired results,” Rodgers explained.

The Google guidelines instruct raters to give the “lowest” ranking to any news-related “content that contradicts well-established expert consensus.” And how does one determine “expert consensus”? The guidelines repeatedly advise raters to consult Wikipedia, which they mention 56 times: “See if there is a Wikipedia article or news article from a well-known news site. Wikipedia can be a good source of information about companies, organizations, and content creators.”

The document cites the Christian Science Monitor as an example. “Notice the highlighted section in the Wikipedia article about The Christian Science Monitor newspaper, which tells us that the newspaper has won seven Pulitzer Prize awards,” the document tells raters. “From this information, we can infer that the csmonitor.com website has a positive reputation.”

The reliance on Wikipedia could partly explain the de-rankings, as the crowdsourced encyclopedia calls Breitbart “far right” and alleges that the Daily Caller “frequently published false stories.” But Wikipedia’s co-founder, Larry Sanger, recently wrote an essay about how “Wikipedia is badly biased.”

In addition to the human ratings used to test algorithms, Google also has human-maintained blacklists, but they are supposed to be very limited. In congressional testimony this summer, Rep. Matt Gaetz asked Google CEO Sundar Pichai if the search engine manually de-ranked websites. Gaetz cited Breitbart and the Daily Caller, as well as WesternJournal.com and Spectator.org, two other conservative news sites that Sistrix data show have been nearly removed from Google searches.

Pichai responded that his company only removes sites if they are deemed to be “interfering in elections” or conveying “violent extremism.”

It remains unclear why the conservative sites were de-ranked. “Only Google can truly know why these sites have seen ranking losses,” SEO expert Rodgers said.

Jeremy Rivera, of JeremyRiveraSEO.com, told RealClearPolitics that there are several possible reasons for de-ranking. Both Breitbart and the Daily Caller have “low-quality” sites linking to them, he said, and they host many ads, which create slow page-load times. “Page-load speed is a direct ranking factor,” he said.

Several news outlets, on both the left and right, have avoided the near-total blackout experienced by Breitbart and the Daily Caller but have still seen their search listings reduced significantly. Among them is The Federalist, which saw search listings fall dramatically this spring after coming under fire for running articles that critics said downplayed the danger of COVID-19.

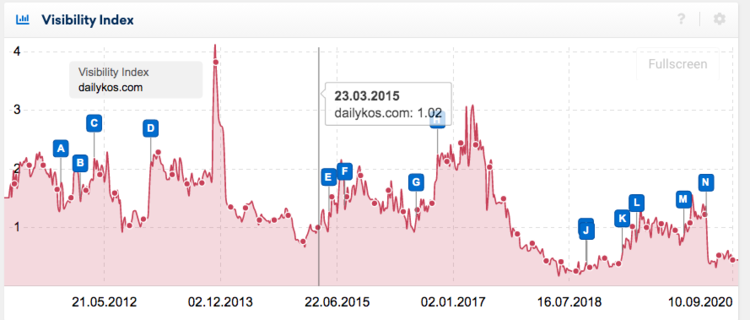

Some left-leaning news sites were docked too.

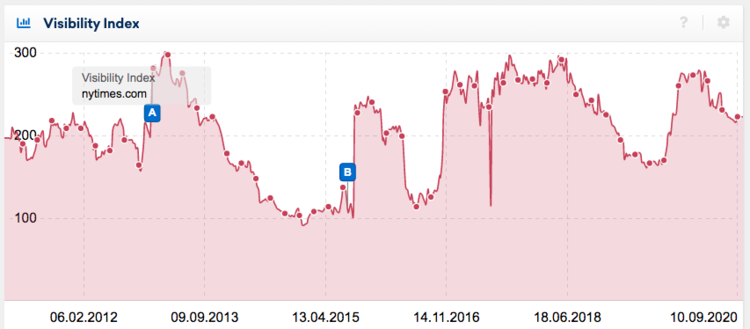

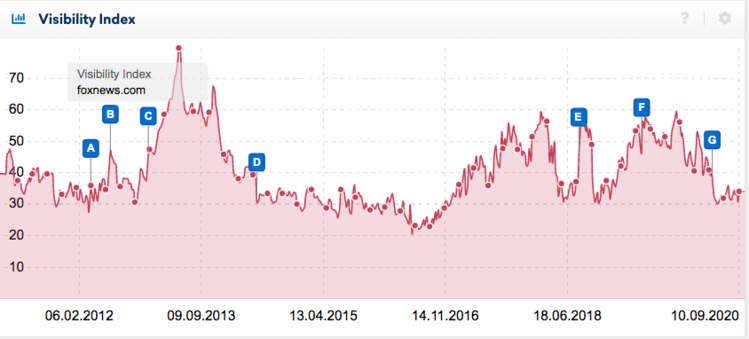

Other major sites, such as the Wall Street Journal, the Washington Post, and CNN, have not seen dramatic changes in rankings, according to Sistrix data.

Some observers say the trends show innovation at work, as companies try to present users with the highest-quality information. “These are inherently subjective questions,” said Berin Szóka of TechFreedom, noting that the First Amendment protects the “complete discretion of private media companies, which includes Google. … They have the same right to decide what content to carry that Breitbart and Fox do.”

Others worry that the near-blacklisting of conservative outlets could tip election outcomes this year. A 2015 peer-reviewed study in the Proceedings of the National Academy of Sciences estimated that a search engine could sway more than 10% of undecided voters in an election simply by altering what results are shown.

“Such manipulations are difficult to detect, and most people are relatively powerless when trying to resist sources of influence they cannot see,” the authors warn. “When people are unaware they are being manipulated, they tend to believe they have adopted their new thinking voluntarily.” The paper further suggests that Google’s 87% market share is a concern. While competitors are growing in popularity, their market share numbers remain low: Microsoft’s Bing has 7.2% of the pie, and DuckDuckGo has 1.75%.

“Because the majority of people in most democracies use a search engine provided by just one company … election-related search rankings could pose a significant threat to the democratic system of government,” the paper concludes.

Read more at: RealClearPolitics.com and EvilGoogle.news.

